Tensorflow multi clearance gpu example


$0 today, followed by 3 monthly payments of $15.00, interest free.


Distributed TensorFlow Working with multiple GPUs servers

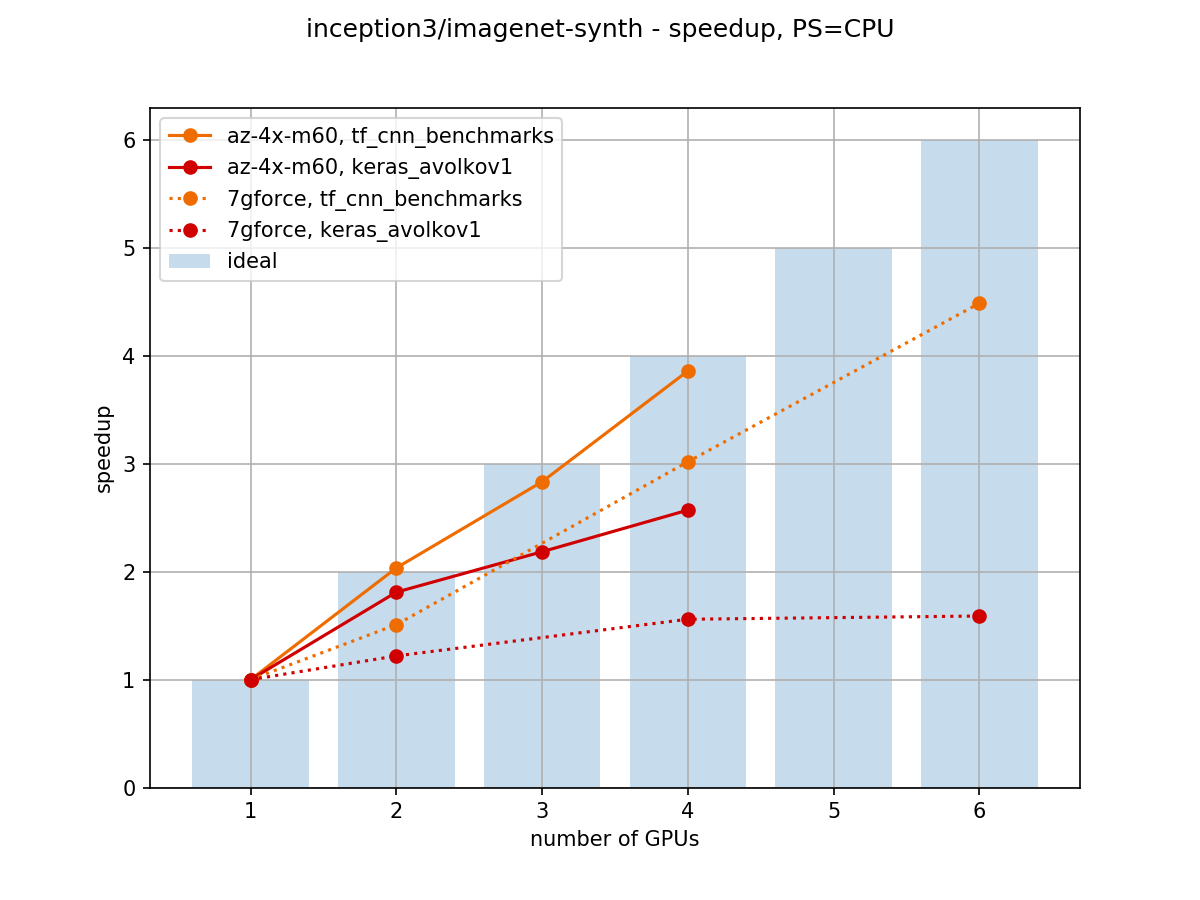

Multi GPU scaling with Titan V and TensorFlow on a 4 GPU

Towards Efficient Multi GPU Training in Keras with TensorFlow by

TensorFlow Multiple GPU 5 Strategies and 2 Quick Tutorials

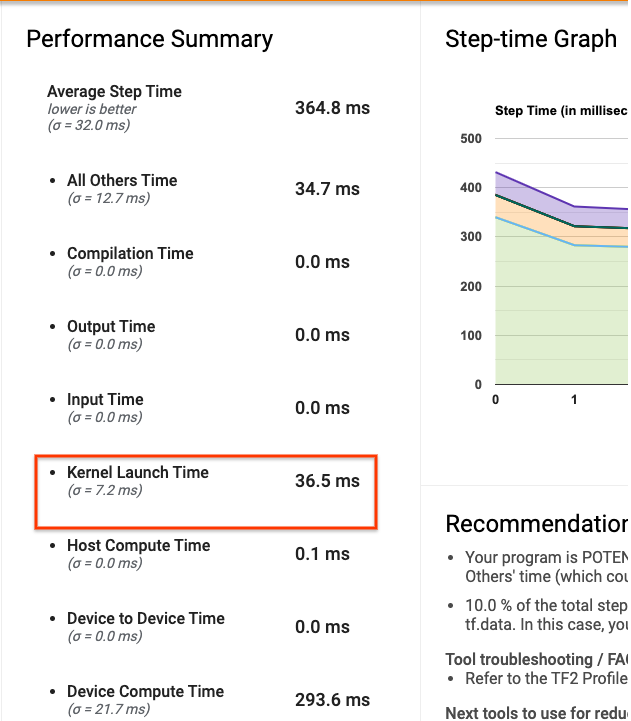

Optimize TensorFlow GPU performance with the TensorFlow Profiler

Multi GPU and distributed training using Horovod in Amazon


Product Name: Tensorflow multi clearance gpu example

- Multi GPUs and Custom Training Loops in TensorFlow 2 by Bryan M
- TensorFlow Examples tensorflow v2 notebooks 6 Hardware
- Keras Multi GPU and Distributed Training Mechanism with Examples
- Using Multiple GPUs in Tensorflow
- Keras Multi GPU A Practical Guide
- Optimize TensorFlow GPU performance with the TensorFlow Profiler
- Multi GPU and Distributed Deep Learning frankdenneman
- How to scale training on multiple GPUs by Giuliano Giacaglia
- TensorFlow Single and Multiple GPU Javatpoint
- What's new in TensorFlow 2.4 The TensorFlow Blog
- python How to run tensorflow inference for multiple models on
- Getting Started with Distributed TensorFlow on GCP The
- Multi GPU on Gradient TensorFlow Distribution Strategies
- Using GPU in TensorFlow Model Single Multiple GPUs by Rinu
- Multi GPU An In Depth Look
- 13.5. Training on Multiple GPUs Dive into Deep Learning 1.0.3
- IDRIS Jean Zay Multi GPU and multi node distribution for
- Single Machine Multi GPU Minibatch Graph Classification DGL 0.9
- TensorFlow in Practice Interactive Prototyping and Multi GPU
- GitHub InzamamAnwar ResNet 50 Multi GPU Implementation Simple
- Validating Distributed Multi Node Autonomous Vehicle AI Training
- Distributed TensorFlow Working with multiple GPUs servers
- Multi GPU scaling with Titan V and TensorFlow on a 4 GPU
- Towards Efficient Multi GPU Training in Keras with TensorFlow by
- TensorFlow Multiple GPU 5 Strategies and 2 Quick Tutorials
- Multi GPU and distributed training using Horovod in Amazon
- Efficient Training on Multiple GPUs
- False out of resource error for multi gpu machine Issue 44637
- Tensorflow How do you monitor GPU performance during model
- Titan V Deep Learning Benchmarks with TensorFlow
- Multi GPU enabled BERT using Horovod
- IDRIS Horovod Multi GPU and multi node data parallelism
- neural network Tensorflow. Cifar10 Multi gpu example performs
- Tensorflow TF Serving on Multi GPU box Issue 311 tensorflow
- How To Multi GPU training with Keras Python and deep learning
- The Definitive Guide to Deep Learning with GPUs cnvrg.io
- A quick guide to distributed training with TensorFlow and Horovod
- Optimize TensorFlow performance using the Profiler TensorFlow Core
- Training Deep Learning Models On multi GPUs BBVA Next Technologies
- Train a Neural Network on multi GPU with TensorFlow by Jordi
- NVIDIA Multi Instance GPU User Guide NVIDIA Data Center GPU
- Using multi GPU computing for heavily parallelled processing

- Next Day Delivery by DPD: $11.99. Order by 9pm (excludes public holidays).
- Express Delivery (48 hours): $9.99. Order by 9pm (excludes public holidays).
- Standard Delivery: $6.99. Delivered within 3-7 days (excludes public holidays).
- Store Delivery: $6.99. Delivered to your chosen store within 3-7 days. Spend over $400 (excluding delivery charge) to get a $20 voucher to spend in-store.
- International Delivery: available for this product. The cost and delivery time depend on the country.

You can now return your online order in a few easy steps. Select your preferred tracked returns service. We have print at home, paperless and collection options available.

You have 28 days to return your order from the date it’s delivered. Exclusions apply.


Our extended Christmas returns policy runs from 28th October until 5th January 2025; all items purchased online during this period can be returned for a full refund.

Find similar items here:


- tensorflow multi gpu example

- tensorflow multi gpu training

- tensorflow multiple gpus

- tensorflow not using gpu

- tensorflow on amd

- tensorflow on amd gpu

- tensorflow on windows

- tensorflow radeon

- tensorflow python 2.7 windows

- tensorflow run on gpu